- The Importance of Kernel Scheduling

- Recent Improvements in Kernel Scheduler

- Load Balancing Strategies

- 1. Static Load Balancing

- 2. Dynamic Load Balancing

- 3. Affinity Scheduling

- Challenges and Future Improvements

- Conclusion

In modern operating systems, efficient process management is critical for maintaining good performance, and the kernel scheduler plays a pivotal role in it. A well-designed scheduler not only allocates CPU time fairly among processes but also improves overall system responsiveness. Over the years, various improvements have been introduced in kernel scheduling mechanisms, with load balancing as a key strategy for optimizing resource utilization across multiple processors.

The Importance of Kernel Scheduling

The kernel is the heartbeat of an operating system, responsible for managing system resources, including CPU time for various processes. Scheduling algorithms determine the order and allocation of CPU resources to processes, directly affecting system efficiency and responsiveness. An effective kernel scheduler can significantly improve application throughput and reduce latency, which is especially vital in real-time or high-performance computing environments.

Recent Improvements in Kernel Scheduler

Recent advancements in kernel scheduling have primarily focused on making the scheduler smarter and more adaptive. Key improvements include:

- Multi-Core Optimization: With the widespread use of multi-core processors, modern schedulers have been re-engineered to better allocate processes across CPU cores. Schedulers such as Linux's Completely Fair Scheduler (CFS) track how much CPU time each task has received and adjust scheduling decisions as workload characteristics change, which helps keep all cores well utilized.

- Real-Time Scheduling Enhancements: For applications with stringent deadlines, improvements have been made to real-time scheduling policies. Features like group scheduling allow users to define process groups, ensuring that essential tasks receive timely CPU access without starving other processes.

- Profiling and Analytics: Using performance profiling tools, modern kernels can analyze CPU usage patterns, identify bottlenecks, and optimize scheduling decisions accordingly. This feedback loop enables the scheduler to adapt over time, learning from historical performance data.
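To make the fairness idea behind the multi-core item more concrete, here is a minimal sketch of weighted fair scheduling: the task with the smallest virtual runtime always runs next, and heavier tasks accumulate virtual runtime more slowly. This is a toy model under stated assumptions, not the kernel's implementation; the function name, task names, weights, and slice length are all hypothetical.

```python
import heapq

def simulate_fair_schedule(weights, slices, slice_ms=10):
    """Toy fair scheduler (illustrative only).

    weights: dict mapping task name -> weight (higher = larger CPU share).
    Returns a dict mapping task name -> total real milliseconds received.
    """
    # Heap entries are (virtual_runtime, name); ties break on the name.
    heap = [(0.0, name) for name in weights]
    heapq.heapify(heap)
    received = {name: 0 for name in weights}
    for _ in range(slices):
        # Always run the task that has had the least virtual runtime so far.
        vruntime, name = heapq.heappop(heap)
        received[name] += slice_ms
        # Virtual time advances more slowly for heavier tasks,
        # so they are selected more often.
        heapq.heappush(heap, (vruntime + slice_ms / weights[name], name))
    return received

# Hypothetical workload: "editor" has twice the weight of "backup".
shares = simulate_fair_schedule({"editor": 2, "backup": 1}, slices=300)
print(shares)
```

In this model a task with twice the weight ends up with twice the CPU time, without any task being starved.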

Load Balancing Strategies

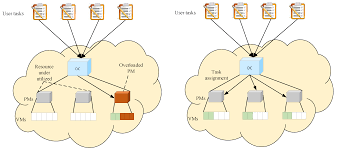

Load balancing is an essential aspect of kernel scheduling, especially in multi-processor environments. Effective load balancing reduces bottlenecks and improves system performance by distributing workloads evenly across available CPUs. Here are some commonly used strategies:

1. Static Load Balancing

In this method, workloads are allocated to processors based on a predetermined set of rules. While easy to implement, static strategies can suffer from inefficiencies, particularly if processes exhibit variable execution times.
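The inefficiency is easy to see in a small sketch. The example below assumes a fixed round-robin rule (task i goes to CPU i modulo the number of CPUs) and hypothetical task costs in milliseconds; neither comes from a specific kernel.

```python
def static_round_robin(task_costs, num_cpus):
    """Assign task i to CPU i % num_cpus, ignoring how long each task runs."""
    loads = [0] * num_cpus
    for i, cost in enumerate(task_costs):
        loads[i % num_cpus] += cost
    return loads

# Hypothetical task costs in milliseconds: every other task is expensive.
costs = [90, 10, 90, 10, 90, 10, 90, 10]
loads = static_round_robin(costs, num_cpus=2)
print(loads)                    # total work assigned to each CPU
print(max(loads) - min(loads))  # gap between the busiest and the idlest CPU
```

Because the rule never looks at execution times, one CPU receives all the expensive tasks while the other sits mostly idle.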

2. Dynamic Load Balancing

Dynamic strategies adjust process allocation at run time based on current CPU load. This can involve migrating processes from overloaded cores to underutilized ones. Techniques such as task stealing (often called work stealing) let idle CPUs take work from busier ones, improving resource utilization.
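As a rough illustration of task stealing, the sketch below simulates per-CPU run queues in which a CPU that runs out of work takes a task from the tail of the longest remaining queue. It is a simplified model with hypothetical names and costs, and it ignores migration overhead such as cold caches.

```python
from collections import deque

def run_with_stealing(initial_queues):
    """Simulate per-CPU queues of task costs; idle CPUs steal from the
    longest queue. Returns the time at which each CPU finishes."""
    queues = [deque(q) for q in initial_queues]
    busy_until = [0] * len(queues)
    while any(queues):
        # The CPU that becomes free earliest picks its next task.
        cpu = min(range(len(queues)), key=lambda c: busy_until[c])
        if queues[cpu]:
            task = queues[cpu].popleft()   # run local work first
        else:
            # Local queue is empty: steal from the tail of the longest queue.
            victim = max(range(len(queues)), key=lambda c: len(queues[c]))
            task = queues[victim].pop()
        busy_until[cpu] += task
    return busy_until

# Hypothetical starting point: CPU 0 holds all the expensive tasks.
finish = run_with_stealing([[90, 90, 90, 90], [10, 10, 10, 10]])
print(finish)
```

With this starting assignment the total work is 400 ms, and stealing brings the two finish times much closer together than a fixed split of 360 ms and 40 ms would.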

3. Affinity Scheduling

Affinity scheduling aims to keep processes on the CPU cores where they most recently ran. This affinity can significantly reduce cache misses and improve performance, because the data a process needs is likely still in that core's cache when the process resumes execution.
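The scheduler applies this preference on its own, but applications can also request it explicitly. The sketch below uses Python's `os.sched_getaffinity` and `os.sched_setaffinity`, which are only available on some Unix platforms such as Linux; the helper name `run_pinned` and its `cpu_hint` parameter are hypothetical. Note that this is explicit pinning requested by the program, which is stricter than the scheduler's built-in preference.

```python
import os

def run_pinned(cpu_hint=None):
    """Temporarily pin the calling process to one CPU.

    Returns the affinity set in effect while pinned, or None when the
    platform does not expose the affinity calls.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None  # e.g. macOS or Windows
    original = os.sched_getaffinity(0)  # 0 means "the calling process"
    # Use the hint only if it is a CPU this process is allowed to run on.
    target = cpu_hint if cpu_hint in original else min(original)
    try:
        os.sched_setaffinity(0, {target})
        return os.sched_getaffinity(0)
    finally:
        os.sched_setaffinity(0, original)  # restore the previous mask

pinned = run_pinned()
print(pinned)
```

Restoring the original mask afterwards keeps the example from affecting the rest of the program.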

Challenges and Future Improvements

While significant strides have been made, kernel schedulers still face open problems. CPU cache contention, priority inversion, and the varying demands of different workloads all remain difficult to handle well. Future improvements may focus on integrating machine learning algorithms to predict workloads and adapt scheduling policies proactively.

Furthermore, as systems evolve to include more heterogeneous hardware, involving GPUs and specialized processors like TPUs, the kernel scheduler will need to adapt to accommodate these diverse computing elements seamlessly.

Conclusion

The journey of kernel scheduler improvements reflects an ongoing effort to enhance system performance and resource management. Load balancing strategies, crucial for achieving efficient processing in multi-core architectures, continue to evolve and adapt. As technology advances, the kernel scheduler will undoubtedly play an essential role in enabling faster, more efficient, and more responsive computing environments, ensuring that operating systems can meet the demands of tomorrow’s applications.